High-Fidelity Generative Image Compression

About

We combine Generative Adversarial Networks with learned compression to obtain a state-of-the-art generative lossy compression system. In the paper, we investigate normalization layers, generator and discriminator architectures, training strategies, as well as perceptual losses. In a user study, we show that our method is preferred to previous state-of-the-art approaches even if they use more than 2× the bitrate.

Demo

An interactive demo compares our method (HiFiC, pronounced ˈhaɪˈfaɪˈsiː) to JPEG or BPG.

Paper

NeurIPS 2020

PDF including supplementary material available on arXiv

Visual Supplementary

The PDF on arXiv includes the supplementary material, which provides more information.

Additional visual results are hosted as a PDF here.

All Evaluation Images

We evaluate our method on CLIC2020, DIV2K, and Kodak.

Reconstructions of HiFiC on all these datasets can be found here.

Code

Training code, code to evaluate with our models, and more details are available as part of the TensorFlow Compression repo at TFC/models/hific.

Explore our models on your own images with Colab!

User Study

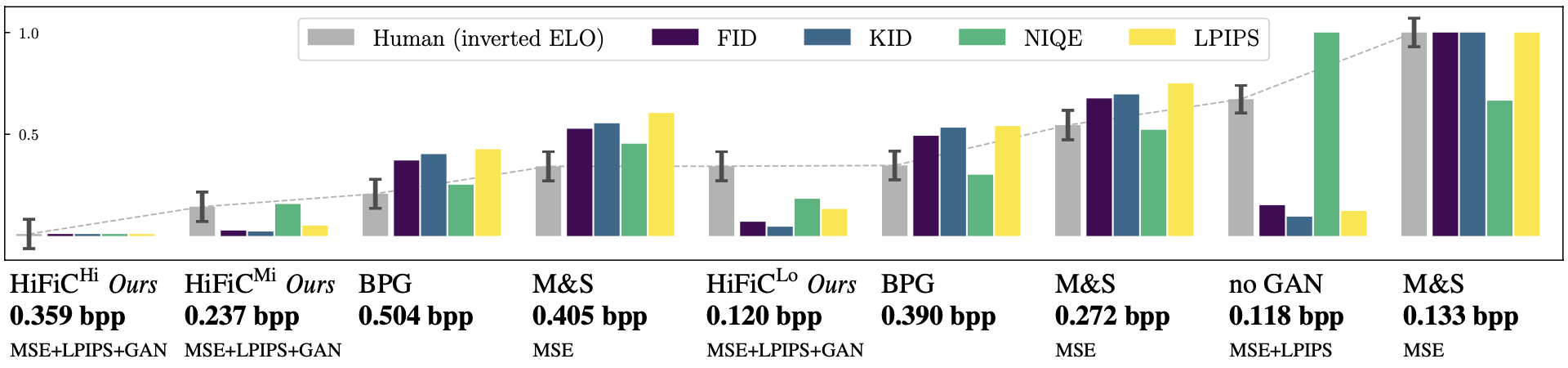

The figure above shows normalized scores for the user study, compared to perceptual metrics; lower is better for all of them.

HiFiC is our method. M&S is the deep-learning-based Mean & Scale Hyperprior from Minnen et al., optimized for mean squared error. BPG is a non-learned codec based on H.265 that achieves very high PSNR. No GAN is our baseline, which uses the same architecture and distortion as HiFiC but is trained without a GAN. Below each method, we show the average bits per pixel (bpp) on the images from the user study, and for learned methods we also show the loss components.

The study shows that training with a GAN yields reconstructions that outperform BPG at practical bitrates for high-resolution images. Our model at 0.237 bpp is preferred to BPG even if BPG uses 2.1× the bitrate, and to MSE-optimized models even if they use 1.7× the bitrate.
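To make the bitrate comparison concrete, the following minimal Python sketch computes bits per pixel from a compressed file size and derives the approximate bitrates implied by the 2.1× and 1.7× factors. The function names and the example file size are hypothetical, chosen only for illustration, and the derived values are approximate because the published ratios are rounded.

```python
def bits_per_pixel(num_bytes: int, width: int, height: int) -> float:
    """Bitrate of a compressed image in bits per pixel (bpp)."""
    return 8 * num_bytes / (width * height)


def implied_bpp(reference_bpp: float, ratio: float) -> float:
    """Bitrate of a codec that uses `ratio` times the reference bitrate."""
    return reference_bpp * ratio


# Average HiFiC bitrate reported for the user study images.
hific_bpp = 0.237

# Approximate bitrates implied by the reported ratios (rounded in the text).
bpg_bpp = implied_bpp(hific_bpp, 2.1)  # BPG at 2.1x the bitrate
mse_bpp = implied_bpp(hific_bpp, 1.7)  # MSE-optimized model at 1.7x

print(f"HiFiC: {hific_bpp:.3f} bpp")
print(f"BPG (2.1x): about {bpg_bpp:.3f} bpp")
print(f"MSE-optimized (1.7x): about {mse_bpp:.3f} bpp")

# Hypothetical example: a 768x512 image stored in 11650 bytes.
print(f"Example file: {bits_per_pixel(11650, 768, 512):.3f} bpp")
```

Under these assumptions, HiFiC at 0.237 bpp is being compared against BPG at roughly 0.50 bpp and against MSE-optimized models at roughly 0.40 bpp.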

Citation

@article{mentzer2020high,
  title={High-Fidelity Generative Image Compression},
  author={Mentzer, Fabian and Toderici, George D and Tschannen, Michael and Agustsson, Eirikur},
  journal={Advances in Neural Information Processing Systems},
  volume={33},
  year={2020}
}